After I wrote my last post, I was talking with Donnie Berkholz as we traveled to FOSDEM. Donnie commented on how powerful of a post it was, yet it left the reader hanging. He, and other readers, wanted more. So I’ve taken the liberty of breaking down more of the reasons Enterprise IT needs a “special kind of DevOps” as posted by Andi Mann. I don’t want anyone to think I am picking on Andi personally. Rather, his post reminds me of all the excuses Enterprises give as to why “We can’t change”. As Mick told Rocky, “There ain’t no can’ts!”

- They cannot achieve the same levels of agility and personal responsibility as a smaller or less complex organization.

Why Not? Principles that teach agility and speed have long been used at large companies such as Microsoft. (Yes, feel free to say Microsoft is a bad example, they are still one of the world’s largest software companies.) Additionally, if one doesn’t want to take personal responsibility for what they produce for a company, maybe they are in the wrong job for the wrong company?

- They cannot stream new code into production and just shut down for a couple of hours to fallback if it fails.

This is fool-hardy to begin with. The goal of methods such as Continuous Integration is to be constantly building releases and testing them to catch problems before they are released to production. Also, the idea is to test small changes, so you know exactly what breaks, rather than large chunks of code. Large enterprises “cannot stream new code” because they haven’t built the necessary flows in front of production releases to effectively and efficiently test and verify code changes. This requires IT organizations to fully automate their processes all the way down to server builds, a process they often are incapable of doing because of an attachment to the “old way of doing things”.

- They rarely ever have ‘two pizza teams’ for development or operations (indeed, they are lucky if they have ‘two Pizza Hut teams’).

The size of the team is nearly always irrelevant. Within each Pizza Hut there are tables, and each table consumes the pizza buffet. The goal of DevOps is to increase the flow of the work through those tables so the teams can eat their pizza and leave quicker. As I’ve said before, focusing on the Silos is the wrong way to solve the problem. Rather focus on the grain elevators that move the grain to produce something meaningful.

- They cannot sign up for cloud services with a credit card without exceeding their monthly limit and/or being fired.

Get an MSA/PO with the cloud vendor or build a Private Cloud. Cloud or no cloud, building strong automation on top of existing VM or server infrastructure can help alleviate many problems in service delivery.

- They cannot allow developers to access raw production data, let alone copy it to their laptop for development or testing.

Scrub the data. DevOps or not, this is a problem that we’ve solved years ago. When I worked at a major e-commerce site, real data was often required for testing, but that data was always cleaned of any sensitive PII. This is not an issue that is unique to DevOps.

- They cannot choose to stream new code into production in violation of a change freeze, or even without the prior approval of a CAB.

Once again, one assumes that DevOps is all about willy nilly pushing of code to production. One aspect of DevOps is about increasing the flow of the work through the system by optimizing the centers where value is added. As I’ve discussed before, principles and practices of DevOps actually help things like Change Control.

- They cannot just tell developers to carry pagers ‘until their software is bedded in’ (not least because their developers have always carried pagers, and on a full-time basis).

If Devs already carry pagers, then they’ve already been told to carry pagers, hence, “they” can indeed tell their Devs to carry pagers. Additionally, bedding in of the software should happen in the lower environments as discussed previously. If you’ve done things right before production, pagers become a tool that is used when things go really badly. It’s a form of monitoring and incident response that becomes meaningful again because you aren’t being paged for endless break fix work.

- They cannot put developers and operators together because one team works 24×7 shifts in 7data centers while the other works 16-hour days in 12 different locations.

Well, good, they at least have 16 hours a day together. Highly distributed remote teams are becoming more and more common. Technology is evolving to help bring this concept of remote work and people are finding creative ways to work around it. I’m also against the idea that DevOps is all about merging dev and ops onto one team, because that is not the point. The idea, as already stated, is to increase the flow of work between Dev and Ops and build a culture of continuous improvement between the two groups (three groups if you include the business). Dev, Ops, Business, who gives a shit. The point is working towards a common goal, no matter where you sit.

What large IT shops cannot do is be satisfied anymore with the status-quo. They cannot accept the ways of the past any longer, and they have to start thinking about blowing up their way of doing things. They cannot let the castles and fiefdoms of the past get in the way any longer.

I think the single most powerful question any IT shop can ask themselves is, “What if everything we’ve been doing over the last X years is completely wrong?” Start there, and reevaluate everything you’ve been doing to achieve (or not achieve) the results your customers require.

Tom McLaughlin – Twitter – LinkedIn

Tom McLaughlin – Twitter – LinkedIn

Joe Beda –

Joe Beda –  Adam Auerbach –

Adam Auerbach –  Tapabrata Pal (Topo) –

Tapabrata Pal (Topo) –

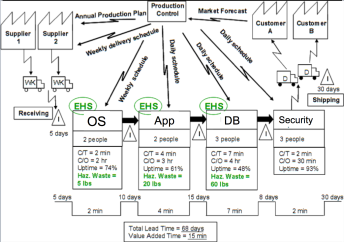

professional movement. And that is where Value Stream Mapping and the ideas of Lean come into the conversation. Books like the “

professional movement. And that is where Value Stream Mapping and the ideas of Lean come into the conversation. Books like the “